Why Use Queues for Large Data Imports?

Processing large Excel files synchronously can lead to several problems including PHP execution timeouts, memory exhaustion, and blocking the main application thread. Laravel queues solve these issues by:

- Processing data in the background without blocking user interactions

- Breaking large datasets into manageable chunks

- Providing retry mechanisms for failed imports

- Enabling horizontal scaling with multiple queue workers

- Offering better error handling and monitoring capabilities

Prerequisites and Setup

Installing Required Packages

First, install the Laravel Excel package and ensure your queue system is properly configured:

composer require maatwebsite/excel

php artisan vendor:publish --provider="Maatwebsite\Excel\ExcelServiceProvider" --tag=config

Queue Configuration

Configure your queue driver in the `.env` file. For production environments, consider using Redis or database queues:

# For Redis (recommended for production)

QUEUE_CONNECTION=redis

# For Database (good for development/small scale)

QUEUE_CONNECTION=database

# For testing purposes

QUEUE_CONNECTION=sync

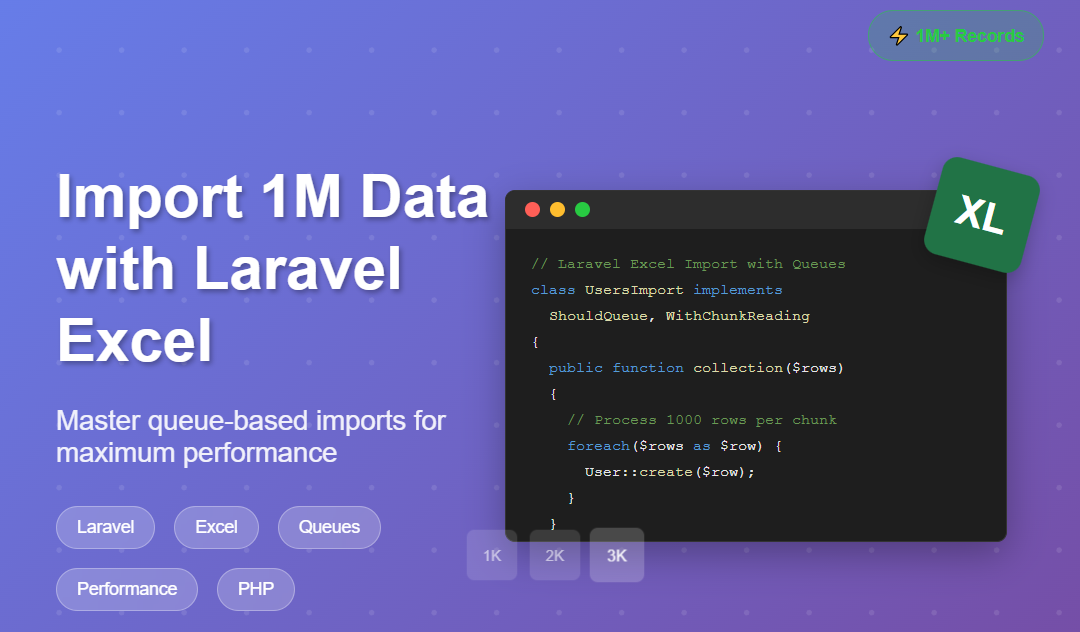

Creating the Import Class

Basic Import Class Structure

Create an import class that implements Laravel Excel's import interfaces with queue support:

$row['name'],

'email' => $row['email'],

'password' => Hash::make($row['password']),

'phone' => $row['phone'] ?? null,

'address' => $row['address'] ?? null,

]);

}

}

public function chunkSize(): int

{

return 1000; // Process 1000 rows at a time

}

public function rules(): array

{

return [

'*.name' => 'required|string|max:255',

'*.email' => 'required|email|unique:users,email',

'*.password' => 'required|string|min:8',

];

}

}

Advanced Import with Batch Processing

Optimized Import Class for Large Datasets

For handling 1 million records efficiently, use batch inserts and implement proper error handling:

$row['name'],

'email' => $row['email'],

'password' => Hash::make($row['password']),

'phone' => $row['phone'] ?? null,

'address' => $row['address'] ?? null,

'created_at' => now(),

'updated_at' => now(),

];

// Insert in batches

if (count($batchData) >= $batchSize) {

$this->insertBatch($batchData);

$batchData = [];

}

}

// Insert remaining records

if (!empty($batchData)) {

$this->insertBatch($batchData);

}

}

private function insertBatch(array $data)

{

try {

DB::table('users')->insert($data);

} catch (Throwable $e) {

// Log error and handle individually

$this->handleFailedBatch($data, $e);

}

}

private function handleFailedBatch(array $data, Throwable $e)

{

// Log the error

\Log::error('Batch insert failed: ' . $e->getMessage());

// Try inserting records individually

foreach ($data as $record) {

try {

DB::table('users')->insert($record);

} catch (Throwable $individualError) {

\Log::error('Individual record failed: ' . $individualError->getMessage(), $record);

}

}

}

public function chunkSize(): int

{

return 1000;

}

public function rules(): array

{

return [

'*.name' => 'required|string|max:255',

'*.email' => 'required|email',

'*.password' => 'required|string|min:8',

];

}

}

Controller Implementation

Import Controller with Progress Tracking

Create a controller to handle file uploads and track import progress:

all(), [

'file' => 'required|file|mimes:xlsx,xls,csv|max:51200', // 50MB max

]);

if ($validator->fails()) {

return response()->json([

'status' => 'error',

'message' => 'Invalid file format or size',

'errors' => $validator->errors()

], 400);

}

try {

$file = $request->file('file');

$importId = uniqid('import_');

// Store import status

Cache::put("import_status_{$importId}", [

'status' => 'processing',

'progress' => 0,

'total_rows' => 0,

'processed_rows' => 0,

'started_at' => now(),

], 3600); // Cache for 1 hour

// Queue the import

Excel::queueImport(new OptimizedUsersImport(), $file)->chain([

new \App\Jobs\ImportCompletedJob($importId)

]);

return response()->json([

'status' => 'success',

'message' => 'Import started successfully',

'import_id' => $importId

]);

} catch (\Exception $e) {

return response()->json([

'status' => 'error',

'message' => 'Import failed: ' . $e->getMessage()

], 500);

}

}

public function getImportStatus($importId)

{

$status = Cache::get("import_status_{$importId}");

if (!$status) {

return response()->json([

'status' => 'error',

'message' => 'Import not found'

], 404);

}

return response()->json($status);

}

}

Job for Import Completion

Handling Import Completion

Create a job to handle post-import tasks:

importId = $importId;

}

public function handle()

{

// Update import status

$status = Cache::get("import_status_{$this->importId}", []);

$status['status'] = 'completed';

$status['completed_at'] = now();

Cache::put("import_status_{$this->importId}", $status, 3600);

// Send notification, update database, etc.

// You can add additional logic here like sending emails

}

}

Frontend Integration

File Upload Form with Progress Tracking

Create a user-friendly interface for file uploads with real-time progress updates:

<!DOCTYPE html>

<html>

<head>

<title>Large Data Import</title>

<meta name="csrf-token" content="{{ csrf_token() }}">

<script src="https://cdn.jsdelivr.net/npm/axios/dist/axios.min.js"></script>

</head>

<body>

<div class="import-container">

<h2>Import Large Dataset</h2>

<form id="importForm" enctype="multipart/form-data">

<div class="form-group">

<label for="file">Select Excel/CSV File:</label>

<input type="file" id="file" name="file" accept=".xlsx,.xls,.csv" required>

</div>

<button type="submit" id="importBtn">Start Import</button>

</form>

<div id="progressContainer" style="display: none;">

<h3>Import Progress</h3>

<div class="progress-bar">

<div id="progressBar" style="width: 0%; background: #4CAF50; height: 20px;"></div>

</div>

<p id="progressText">Starting import...</p>

</div>

<div id="result"></div>

</div>

<script>

// Add the JavaScript code here (see next section)

</script>

</body>

</html>

JavaScript for Progress Tracking

document.addEventListener('DOMContentLoaded', function() {

const form = document.getElementById('importForm');

const progressContainer = document.getElementById('progressContainer');

const progressBar = document.getElementById('progressBar');

const progressText = document.getElementById('progressText');

const result = document.getElementById('result');

form.addEventListener('submit', async function(e) {

e.preventDefault();

const formData = new FormData();

const fileInput = document.getElementById('file');

formData.append('file', fileInput.files[0]);

try {

// Start import

const response = await axios.post('/api/import', formData, {

headers: {

'Content-Type': 'multipart/form-data',

'X-CSRF-TOKEN': document.querySelector('meta[name="csrf-token"]').getAttribute('content')

}

});

if (response.data.status === 'success') {

const importId = response.data.import_id;

progressContainer.style.display = 'block';

// Start polling for progress

pollProgress(importId);

}

} catch (error) {

result.innerHTML = `Error: ${error.response?.data?.message || error.message}`;

}

});

async function pollProgress(importId) {

try {

const response = await axios.get(`/api/import-status/${importId}`);

const status = response.data;

// Update progress

const progress = status.total_rows > 0 ? (status.processed_rows / status.total_rows) * 100 : 0;

progressBar.style.width = progress + '%';

progressText.textContent = `Processed ${status.processed_rows} of ${status.total_rows} records (${Math.round(progress)}%)`;

if (status.status === 'completed') {

progressText.textContent = 'Import completed successfully!';

result.innerHTML = 'Import completed successfully!';

} else if (status.status === 'failed') {

progressText.textContent = 'Import failed';

result.innerHTML = 'Import failed. Please try again.';

} else {

// Continue polling

setTimeout(() => pollProgress(importId), 2000);

}

} catch (error) {

console.error('Error polling progress:', error);

setTimeout(() => pollProgress(importId), 5000); // Retry after 5 seconds

}

}

});

Performance Optimization Tips

Database Optimization

Optimize your database for large imports with proper indexing and configuration:

-- Add indexes for frequently queried columns

CREATE INDEX idx_users_email ON users(email);

CREATE INDEX idx_users_created_at ON users(created_at);

-- Optimize MySQL for bulk imports (add to my.cnf)

-- innodb_buffer_pool_size = 1G

-- innodb_log_file_size = 256M

-- bulk_insert_buffer_size = 256M

Memory and Queue Optimization

Configure your queue workers and PHP settings for optimal performance:

// In config/queue.php

'connections' => [

'redis' => [

'driver' => 'redis',

'connection' => 'default',

'queue' => env('REDIS_QUEUE', 'default'),

'retry_after' => 3600, // 1 hour

'block_for' => null,

'after_commit' => false,

],

],

// Run multiple queue workers for parallel processing

// php artisan queue:work --queue=default --tries=3 --timeout=3600

Error Handling and Monitoring

Comprehensive Error Handling

Implement robust error handling to manage failed imports gracefully:

// Add to your import class

public function onError(Throwable $e)

{

// Log detailed error information

\Log::error('Import failed', [

'error' => $e->getMessage(),

'file' => $e->getFile(),

'line' => $e->getLine(),

'trace' => $e->getTraceAsString()

]);

}

public function onFailure(Failure ...$failures)

{

foreach ($failures as $failure) {

\Log::error('Row failed validation', [

'row' => $failure->row(),

'errors' => $failure->errors(),

'values' => $failure->values()

]);

}

}

Testing Large Imports

Unit Test Example

create('users.xlsx', 1024); // 1MB fake file

$response = $this->postJson('/api/import', [

'file' => $file

]);

$response->assertStatus(200);

$response->assertJson([

'status' => 'success'

]);

Excel::assertQueued('users.xlsx', OptimizedUsersImport::class);

}

}

Conclusion

Successfully importing 1 million records using Laravel Excel and queues requires careful planning and optimization. Key takeaways include:

- Use queue jobs to prevent timeouts and improve user experience

- Implement chunking to process data in manageable batches

- Optimize database configuration for bulk operations

- Include comprehensive error handling and monitoring

- Provide real-time progress feedback to users

- Test thoroughly with realistic data volumes

By following this guide, you can efficiently handle large-scale data imports while maintaining application performance and providing a smooth user experience. Remember to monitor your queue workers and database performance during large imports to ensure optimal results.